…and what a business should and perhaps shouldn’t use it for

If you’re a content writer – as I am – the announcement of public access to OpenAI’s ChatGPT was a bit of an ‘oh shit’ moment. Tinged with admiration.

TL;DR summary…

- ChatGPT is very impressive

- It – and its competitors – are going to get much more impressive very quickly

- It’s not perfect though and makes mistakes (big ones) which means you shouldn’t rely on it 100%

- It’s going to massively shake up most jobs, make lots of them redundant, or both

- Short, medium and long term effects will differ, and I have some suggestions as to what to do now

A bit of history

Mind you, it shouldn’t have come as a surprise to anyone. Years ago, shortly after businesses worked out that ranking at the top of Google search engine results depended on good quality content (and lots of it), the hunt was on for something that would make producing that content quicker and easier.

Copying was the obvious shortcut, and the first step Google took to combat it was downgrading any web page that was filled with content copied from another, earlier web page – ‘duplicate content’. The page with the copied text would simply not be included in the results at all.

This in turn led to the development of ‘spinning’ software, which would take an existing text and ‘spin’ it by replacing some words with synonyms and juggling up the word order a bit. All great in theory but in practice some of these spun texts you could spot from a country mile and they gave you a mild headache just reading them. They didn’t fool Google for very long.

Now

But now we have software – call it ‘Artificial Intelligence’ if you like – that can do a pretty good job of writing copy that reads as if it was written by a competent writer. About a year ago various AI content generators (think Jasper – previously called Jarvis – Copysmith, ArticleForge etc.) started to produce much better copy than the spinners. These were mainly powered by GPT-3 – the family of models behind OpenAI’s ChatGPT – the only downside being that you had to pay for them.

The seemingly sudden emergence of ChatGPT took a lot of people by surprise, including Google, which issued an internal ‘red alert’ – an ‘everyone to the boardroom now’ type of situation (see more below). Microsoft was less surprised, as it was – and is – a backer of OpenAI and now has a version of ChatGPT built into its search engine, Bing.

Google has of course also been developing its own model, LaMDA, which powers Bard, Google’s version of ChatGPT. Unfortunately, Bard recently stuffed up in a public demonstration by giving incorrect information in an answer.

The written content component of what ChatGPT, and systems like it, can do is only a part of the picture. ChatGPT can for example also…

- write computer code in a range of different (computer) languages

- understand a large number of (human) languages and reply in those languages, or others

- remember the content of a previous question and expand upon its previous answer

- regenerate a response to a question in a different way

- take note of the style of writing you want and write its answer in that style

- observe any word limit you set

Coming soon will be upgrades to ChatGPT which will make it ‘multimodal’, which essentially means you will be able to submit words and images and audio, and ChatGPT will be able to take all of these into account in its response. It will also soon be able to search content in one language and use that content to contribute to its answer in another language. So say there is a lot of information on the web in Mandarin that is relevant to your question, it will be able to use this information to produce its answer, which it can give you in English or any other language in its repertoire.

Is there any hope?

So, what hope is there for writers, artists and musicians (yes there’s AI for music composition too) with this onslaught of AI ‘creativity’?

Right now none of these systems is perfect. Google’s Bard got its facts wrong and ChatGPT gets things wrong too. The problem is that the answers are phrased in such a casually authoritative way that you hesitate to challenge them. The other thing is that ChatGPT doesn’t normally tell you where it got its information from, although you can ask it.

Other issues relate to the intellectual property rights attached to the sources it uses and whether ChatGPT is in breach of those rights. That argument is slightly further ahead for conventional artists and their work; some of them are already suing. There is also the possibility of inbuilt bias in its answers, inherited from any biased sources it draws on.

The outlook from here

So here are my thoughts, split into three – long term, medium term and short term.

Long term

It’s not looking good for us humans. If a small number of large businesses control the main AI players then this is a recipe for a huge transfer of wealth to them from everyone else. Plus of course there’s the Skynet situation where the AI takes over everything. The profit motive currently trumps everything else and unless governments legislate what can and can’t be done by AI then we’ll see a version of the Wild West on steroids, and – obviously – potentially much worse.

Medium term

Google’s business model may be upended in the same way it upended all the previous sources of information twenty-odd years ago (e.g. Encyclopaedia Britannica). This will mean that one of the main reasons for wanting to use ChatGPT – to create content to keep Google happy – will be irrelevant, as the Google search engine won’t exist in its current form. It’ll be much easier to ask the ChatGPTs and Bards for an answer than to scroll through results on Google trying to find the answer yourself. The question will be whether you trust those answers!

Short term

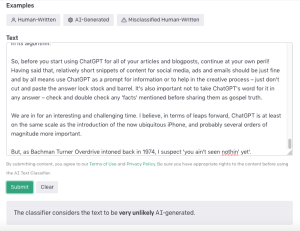

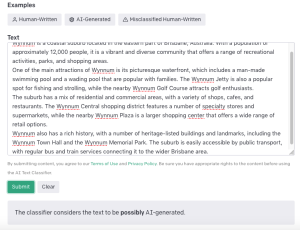

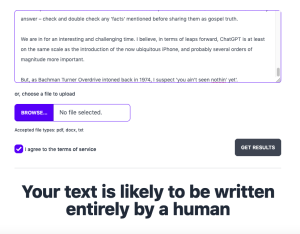

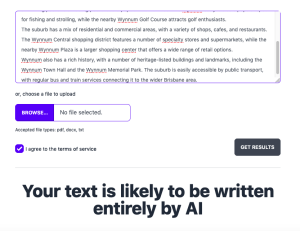

Google isn’t going away just yet, and it will certainly be beefing up its ability to detect content that isn’t ‘written by humans for humans’. There are already a number of tools that can help you determine whether content was likely written by AI or by a human, such as OpenAI’s text classifier (https://platform.openai.com/ai-text-classifier) and another called GPTZero (https://gptzero.me/).

So if these tools are already out there you can bet your bottom dollar that Google will have something similar in its algorithm.

Suggestions

So, if you plan to use ChatGPT for all of your articles and blog posts, proceed at your own peril! Having said that, relatively short snippets of content for social media, ads and emails should be just fine, and by all means use ChatGPT as a prompt for information or to help in the creative process – just don’t cut and paste the answer lock, stock and barrel on to your website if you want to rank on Google. It’s also important not to take ChatGPT’s word for it in any answer – check and double check any ‘facts’ mentioned before sharing them as gospel truth.

We are in for an interesting and challenging time. I believe, in terms of leaps forward, ChatGPT is at least on the same scale as the introduction of the now ubiquitous iPhone, and probably several orders of magnitude more important.

But, as Bachman-Turner Overdrive intoned back in 1974, I suspect ‘you ain’t seen nothin’ yet’.

Further Reading

For a more nuanced discussion of detection of AI generated content…

https://theconversation.com/we-pitted-chatgpt-against-tools-for-detecting-ai-written-text-and-the-results-are-troubling-199774

Main image credit: https://www.pexels.com/@kindelmedia/